Evaluating Summer Reading Programs: Suggested Improvements

Summer reading programs (SRPs) have been a staple for many, if not most, public libraries since the turn of the twentieth century. Their popularity attests to the continuing value of encouraging reading among primary and secondary grade children in communities across the nation.

Each summer, during the annual break from school, children’s reading skills often decline. This phenomenon is sometimes called “summer loss,” “summer learning loss,” or “summer reading setback.” A consistent finding across a number of research studies is that summer reading setback, presumably the result of inadequate reading practice, is a very real phenomenon. It affects children living in poverty the most, and its effects are cumulative.

Over the last two decades, research has consistently shown that reading scores fall and voluntary reading declines as children move from childhood to late adolescence. One particularly discouraging finding is that nine-year-olds read more than their thirteen- and seventeen-year-old counterparts.

One of the common assumptions about SRPs is that they benefit participating children in many ways. Reading practice improves word recognition, builds vocabulary, improves fluency and comprehension, is a powerful source of world knowledge, and helps children understand the complex syntax and grammar of written language. However, most children do very little reading out of school, and only a small number read for extended periods of time.

While there is a plethora of literature pertaining to SRPs, there is little literature that focuses on the evaluation of these programs. What literature there is can be conveniently divided into two broad categories: summer school programs and public library SRPs.

The Impact of Summer School Programs

My review of the research that has been conducted about summer school programs suggests that:

- Students who do not engage in educational activities during the summer typically score lower on tests at the end of summer than they did at the beginning.

- Summer school programs that focus on lessening or removing learning deficiencies have a positive impact on the knowledge and skills of participants.

- Summer school programs focusing on the acceleration of learning or on multiple other goals also have a positive impact on participants, roughly equal to that of remedial programs.

- Summer school programs have more positive effects on the achievement of middle-class students than on students from disadvantaged backgrounds.

- Remedial summer programs have larger positive effects when the program is run for a small number of schools or classes or in a small community.

- Summer programs that provide small group or individual instruction produce the largest impact on student outcomes.

- Summer programs that require some form of parental involvement produce larger positive effects than programs without this component.

- Remedial summer programs may have a larger effect on math achievement than on reading.

- The achievement advantage gained by students who attend summer school may diminish over time.

- Remedial summer school programs have positive effects for students at all grade levels, although the effects may be more pronounced for students in the early primary grades and in secondary school than for those in the middle grades.

- Summer programs that undergo careful scrutiny for treatment fidelity, including monitoring to ensure that instruction is being delivered as prescribed, monitoring of attendance, and removal from the evaluation of students with many absences, may produce larger effects than unmonitored programs.

- On most days the majority of children do little or no reading. A child in the 90th percentile in amount of book reading spends nearly five times as many minutes reading per day as the child in the 50th percentile and over 200 times as many minutes per day as the child in the 10th percentile.

- Summer reading setback is one of the important factors contributing to the reading achievement gap between rich and poor children.

- There is little difference in reading gains between children from high- and low-income families during the school year. Rather, the differences are the result of what happens during the summer.

- Children from high-income families make superior progress in reading over the summer, and over time this summer advantage can account for the social-class differences in reading achievement. This is because children from high-income families tend to read more over the summer.

- Children from lower-income families have more restricted access to books (both in school and out of school) than do their more advantaged peers.

- The more children read, the better their fluency, vocabulary, and comprehension.

Library Summer Reading Programs

The traditional SRP is designed primarily to encourage elementary school children to read independently during the summer vacation. A 1988 study defined the effectiveness of a SRP in terms of the percentage of a community’s school-age children it reached.1 Libraries reaching more than 8 percent of the total child population were designated successful, and an analysis revealed that successful libraries:

- were located in smaller communities;

- reached a mean of 11 percent of school-age children while unsuccessful libraries reached a mean of 3 percent (the range was from zero to 40 percent);

- produced a written evaluation report; and

- established goals and objectives for the SRP.
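The reach measure behind this definition is simple arithmetic: registrants divided by the number of school-age children, expressed as a percentage. The Python sketch below applies the 8 percent criterion described above. The function names and the example figures are hypothetical and are not drawn from the 1988 study.

```python
# Hypothetical sketch: classify a library's SRP reach using the
# "more than 8 percent" criterion attributed to the 1988 study.

SUCCESS_THRESHOLD_PERCENT = 8.0


def reach_percent(registered: int, school_age_children: int) -> float:
    """Return SRP registrants as a percentage of the community's school-age children."""
    if school_age_children <= 0:
        raise ValueError("school_age_children must be positive")
    return 100.0 * registered / school_age_children


def is_successful(registered: int, school_age_children: int) -> bool:
    """True when reach is strictly greater than the 8 percent threshold."""
    return reach_percent(registered, school_age_children) > SUCCESS_THRESHOLD_PERCENT


if __name__ == "__main__":
    # Invented figures for illustration only.
    registered, children = 550, 5000
    print(f"reach: {reach_percent(registered, children):.1f}%")  # reach: 11.0%
    print("successful:", is_successful(registered, children))     # successful: True
```

Because the criterion reads "more than 8 percent," a library at exactly 8.0 percent is treated here as not meeting it.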

A survey of Pennsylvania and southern California public libraries found that SRPs are thriving and attract large numbers of children and families each year.2 These studies found that:

- Three-fourths of the libraries noted that circulation increases by 6 to 10 percent during the summer, and they assume that most of this increase can be attributed to the SRP.

- The majority of SRP children visit the library weekly.

- A third of the libraries report that the SRP attracts more than two hundred children.

- Children who attend summer library programs read on a higher level than those who do not attend—including those children who attend summer camp programs.

- Library events related to a SRP (crafts, songs, drama, storytelling, and puppetry) extend the reading experience.

- Summer library programs encourage parents to be involved in their children’s reading.

- Children participating in the SRP spend more time reading than their counterparts.

- Eleven percent of the parents reported that they spend more time reading with or to their children (fifteen hours or more per week).

- The amount of reading is substantial—36 percent of the children read one to twenty books; 17 percent read twenty-one to thirty books; 27 percent read thirty-one to fifty books; and 21 percent read fifty-one or more books.

- Participants are much more enthusiastic about reading.

- Teachers reported that 31 percent of the participants had maintained or improved their reading skills compared to 5 percent of nonparticipants.

Interestingly, the number of books read during the summer can make a difference. A number of studies suggest that reading four to six books over the summer helps readers maintain their skills, and reading ten to twenty books helps improve their skills.

Stephen Krashen asserts that free voluntary reading is the best way to improve reading and language development.3 In short, practice makes perfect. Similar results have been found in several SRP evaluations conducted in Canada, England, and New Zealand.

Evaluation Measures

Focusing on the evaluation of the SRP can be beneficial from several different perspectives. If the library is soliciting contributions from the community to support the SRP, an evaluation provides the numbers and the stories needed to make a compelling case. Thinking about the outcomes the library would like to achieve in the lives of the children and their families will help the library determine what kind of SRP it should provide and what kind of data it should collect to demonstrate actual impact.

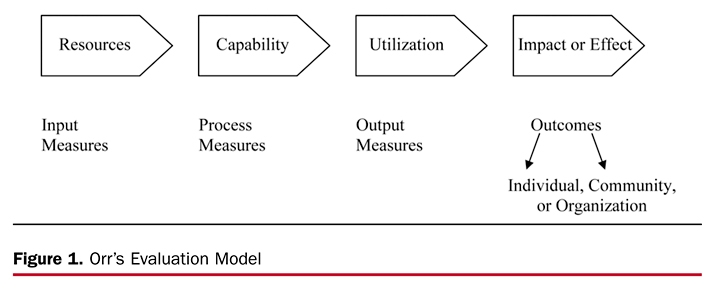

Using Richard Orr’s4 familiar evaluation model, it is possible to organize the many measures that libraries have used or could use in the evaluation of a SRP (see figure 1). Resources (inputs) are needed to organize and conduct the SRP. Children sign up for the program and a percentage of those who start will complete the program (outputs). And the children, their families, and the community will experience immediate and long-term consequences (outcomes).

Input Measures

Input measures typically include the monetary and staffing resources to plan, implement, and evaluate the SRP. Among the input measures that can be tracked are:

- Budget—Some portion of the budget will need to be devoted to promotional materials, marketing expenses, signage, and incentives (if used). Some libraries solicit funds from community organizations for the SRP so that, other than staff time, all the costs are borne by the donated funds.

- Staff—Library employees will be responsible for planning, conducting, and evaluating the program. In addition to tracking who is participating and what is being accomplished, some libraries also ask staff members to track the time they spend on the SRP. Additional staff or volunteers may need to be scheduled for SRP activities and to cope with longer queues of people waiting to check out materials. Measures might include:

● number of staff involved (FTE);

● number of volunteers involved (FTE); and

● number of outreach locations.

- Collections—Some portions of the collection may need to be organized around the SRP theme for that particular summer.

- Facilities—Space for the SRP may need to be organized.

The SRP theme and marketing materials are, of course, intended to encourage children to sign up for the program. Besides avid readers, however, the library needs to recognize three other groups that may be more resistant to the marketing message. Beers calls these groups aliterates: people who can read but choose not to.5 The three groups of aliterates include:

- Dormant readers—People who do not find the time to read but who like to read.

- Uncommitted readers—People who do not like to read but indicate they may read sometime in the future.

- Unmotivated readers—People who do not like to read and are unlikely to change their minds.

Output Measures

Output measures are typically counts of activity, such as participation in a SRP. Among the measures that might be used by a library are:

- individuals registered for the SRP (children, teens, adults);

- SRP participants as a percentage of school-age children and teens;

- number of individuals attending program events;

- number of individuals completing the SRP (children, teens, adults);

- number of books the participants read;

- number of children who read a minimum of X books during the program;

- number of checkouts while the SRP was in operation compared to the prior year;

- number of pages read (children, teens, adults);

- amount of time spent reading alone (children, teens, adults);

- amount of time spent reading with a parent or caregiver (children, teens, adults);

- whether the child spends more time reading with a parent or caregiver;

- number of new library cards issued to families of SRP participants;

- number of SRP-related programs; and

- total attendance at SRP-related programs (children, teens, adults).
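Most of these output measures are simple tallies over participant records. The Python sketch below assumes a hypothetical per-participant record (the `Participant` fields are illustrative, not a standard schema) and computes several of the listed counts for each age group; the minimum number of books, X, is passed in as `min_books`.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Participant:
    """Hypothetical SRP registration record; field names are illustrative."""
    age_group: str               # "children", "teens", or "adults"
    completed: bool              # finished the program
    books_read: int
    pages_read: int
    minutes_alone: int           # total minutes reading alone
    minutes_with_caregiver: int  # total minutes reading with a parent or caregiver


def output_measures(records: Iterable[Participant], min_books: int) -> Dict[str, int]:
    """Tally simple output counts over a group of participants."""
    rows = list(records)
    return {
        "registered": len(rows),
        "completed": sum(r.completed for r in rows),  # True counts as 1
        "books_read": sum(r.books_read for r in rows),
        "read_min_books": sum(r.books_read >= min_books for r in rows),
        "pages_read": sum(r.pages_read for r in rows),
        "minutes_alone": sum(r.minutes_alone for r in rows),
        "minutes_with_caregiver": sum(r.minutes_with_caregiver for r in rows),
    }


def measures_by_age_group(
    records: Iterable[Participant], min_books: int
) -> Dict[str, Dict[str, int]]:
    """Group records by age group, then tally each group separately."""
    groups: Dict[str, List[Participant]] = {}
    for r in records:
        groups.setdefault(r.age_group, []).append(r)
    return {group: output_measures(rows, min_books) for group, rows in groups.items()}


if __name__ == "__main__":
    # Invented records for illustration only.
    records = [
        Participant("children", True, 12, 900, 600, 300),
        Participant("children", False, 3, 200, 120, 0),
        Participant("teens", True, 8, 1500, 900, 0),
    ]
    summary = measures_by_age_group(records, min_books=10)
    print(summary["children"])
    print(summary["teens"])
```

Measures such as checkouts compared with the prior year, new library cards, or program attendance come from other data sources (circulation and event logs), so they are not modeled in this sketch.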

Outcome Measures

Outcome-based performance measures attempt to capture the effect of the library’s SRP on the lives of the children, their families, and ultimately the community. SRP participants might show improvement in a skill level, knowledge, confidence, behavior, or attitude based on a qualitative or quantitative assessment.

In addition to focusing on the participating children and teens, it is also possible to involve parents or adult caregivers and teachers in the assessment process, especially if the library wants to identify the outcomes or impacts of the SRP.

Obviously it requires considerably more effort to gather outcome-based measures about the impact of a SRP than it does to gather output measures. For an outcome-based measure to resonate with decision makers, it would need to meet at least two criteria: (1) the SRP and the outcome must be closely linked by a cause-and-effect relationship, and (2) the outcome must be measurable in a consistent and reliable manner.

A Survey and Analysis

To better understand how public libraries currently evaluate their SRPs, a brief Web-based survey was prepared.6 It was available from May 12, 2009, until June 30, 2009. Because this was a convenience (accidental) sample rather than a random sample, only descriptive statistics are reported. A total of 264 responses were received from public libraries located in 31 states, almost two-thirds of all states. The average population of the responding libraries’ communities was 55,966, with a wide range (from a minimum of 558 to a maximum of 381,833).

Findings

Almost all public libraries keep track of the number of children who register for the SRP: 92.80 percent of survey respondents identify the number of children who register for the program. Most libraries (79.17 percent of respondents) also track the number of children who complete the SRP, which matters because children have many summer activities competing for their time that do not involve trips to the library. Surprisingly few libraries, however, calculate SRP participants as a percentage of the school-age children in their community: only 13.64 percent of the respondents in this survey.

Because research has shown that the number of books read during the summer is important, some libraries track it: a little more than one-third (34.47 percent) of the responding public libraries track the number of books read during the SRP. Among the sixty libraries reporting data, the average number of books read per participant was twenty-seven, ranging from a low of two to a high of one hundred books per child.

Similarly, 31.82 percent of the responding libraries track the amount of time each participant spends reading. On average, each child read 3.22 hours per week, with a range from 0.33 to 18 hours per week. Only a very small number of libraries (4.17 percent) track the number of pages read by each participant.

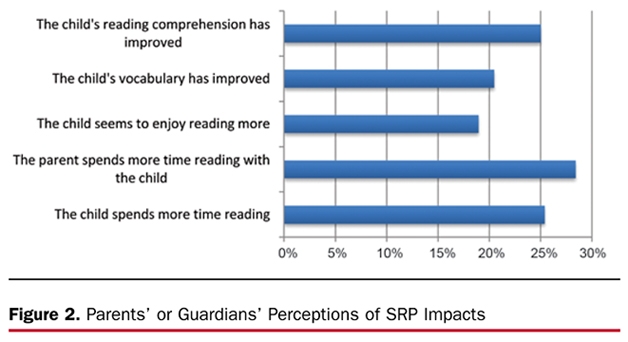

Another important perspective comes from asking parents or guardians how they view the impact of the SRP on their children’s lives. A little more than one-fifth of libraries (21.59 percent) report that they involve parents or guardians in the evaluation process. At these libraries, parents or guardians noted improvements in reading comprehension, vocabulary, and time spent reading between 20 and 25 percent of the time, as shown in figure 2. It is particularly encouraging to see that parents spend more time reading with their children.

The perspective of teachers can also be valuable when assessing a library’s SRP. Can the teacher discern any change in students’ reading ability? Have students’ reading comprehension and vocabulary improved? Have their writing skills improved? Twenty-five percent of the responding libraries reported having conversations with teachers about the impact of the SRP on their students. Yet only 2.65 percent of the libraries partner with schools to use standardized reading tests to determine reading achievement as a result of participation in the SRP.

Almost two-thirds of the libraries (66.29 percent) offer a separate teen SRP, and some libraries even develop a SRP for adults. Slightly more than two-thirds of the responding libraries (69.30 percent) prepare a written evaluation report about the SRP.

Some of the respondents provided comments about how they identify other outcomes of the SRP. These comments show that librarians are interested in better understanding the impacts of the program, but none of the approaches described is systematic.

Implications

From the results of this survey, it is clear that public libraries could be and should be doing more to identify the impact of the SRP in the lives of the participants. If libraries were to adopt a multi-pronged approach in evaluating SRPs by gathering data about the use of the program and determining the impact from the perspective of the children, parents, and teachers, they would begin to develop the data that would resonate with funders.

A public library should identify a minimum set of performance measures that will be consistently gathered year after year. This will allow the library to track trends. Obviously data reported to the state library should be considered as part of the minimum set of measures that are tracked. These measures, subdivided by age of the participant, might include:

- the number of participants who sign up;

- the number of participants who sign up as a percentage of the corresponding population segment in the community (the library should set goals to reach significantly more than 10 percent of the children);

- the number of participants who complete the program;

- the number of books read by each participant;

- the time spent reading per day by each participant;

- the time spent reading per day with their parents/caregivers; and

- data from a short questionnaire for participants and their parents or caregivers.
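Gathering the same measures every year makes trends visible. The Python sketch below assumes a hypothetical yearly record for one age group and reports reach against the 10 percent benchmark mentioned above, along with the change from the previous year and the completion rate. The field names and sample numbers are invented for illustration; books, reading time, and questionnaire data are left out to keep the example short.

```python
from dataclasses import dataclass
from typing import List

# Benchmark from the text: libraries should aim well above 10 percent reach.
GOAL_PERCENT = 10.0


@dataclass
class YearlyMeasures:
    """Hypothetical minimum measure set for one age group in one summer."""
    year: int
    signed_up: int           # participants who registered
    completed: int           # participants who finished the program
    population_segment: int  # e.g., census count of children in this age group

    @property
    def reach_percent(self) -> float:
        return 100.0 * self.signed_up / self.population_segment

    @property
    def completion_percent(self) -> float:
        return 100.0 * self.completed / self.signed_up if self.signed_up else 0.0


def trend_report(history: List[YearlyMeasures]) -> List[str]:
    """Summarize each year's reach and completion, oldest year first."""
    lines = []
    previous = None
    for m in sorted(history, key=lambda r: r.year):
        change = ""
        if previous is not None:
            # Change in reach, in percentage points, versus the prior year.
            change = f" ({m.reach_percent - previous.reach_percent:+.1f} pts)"
        status = "above" if m.reach_percent > GOAL_PERCENT else "at or below"
        lines.append(
            f"{m.year}: reach {m.reach_percent:.1f}%{change}, "
            f"{status} {GOAL_PERCENT:.0f}% goal, "
            f"completion {m.completion_percent:.1f}%"
        )
        previous = m
    return lines


if __name__ == "__main__":
    # Invented figures for illustration only.
    history = [
        YearlyMeasures(2009, 600, 420, 5000),
        YearlyMeasures(2008, 450, 300, 5000),
    ]
    for line in trend_report(history):
        print(line)
```

With these invented figures the report shows reach rising from 9.0 percent (at or below the goal) to 12.0 percent (above it), with completion moving from 66.7 to 70.0 percent. Running the same report separately for children and teens gives the subdivision by age the text recommends.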

Periodically the library could include additional SRP assessment activities to complement the regular evaluation efforts. For example, the library may want to discover the degree to which SRP participants continue to use library resources once the program has concluded. Or the library could compare where participants live with the location of all children in the community.

Specific recommendations for improving a library’s SRP include:

- Calculate the number of SRP participants as a percentage of school-age children. A library can quickly obtain the number of children in its community from census data. Every public library should make this calculation part of its program goal-setting process.

- Set participation goals that are aggressive.

- Develop a better understanding of why children drop out of the SRP. This would allow a library to design a more compelling program that keeps the attention (and participation) of children throughout the summer.

- Focus outreach and marketing efforts on children who are likely to suffer “summer reading loss.” A public library would need to develop a marketing plan that identifies various market segments (children with different characteristics: nonreaders, or aliterates; readers who do not currently use the library; and potential, infrequent, and frequent library customers) and the marketing approaches that would be effective for each segment.

- Expand outreach efforts through more innovative and effective marketing. For example, the Torrance (Calif.) Public Library placed stickers on the elementary school report cards going home to parents. The stickers exclaimed: “Vacation reading equals better grades. Take your child to the public library for summer reading.” As a result of the stickers, the number of children registering for the SRP jumped more than 25 percent.7

- Consider an outreach strategy that takes the SRP to children without requiring them to physically visit the library. The public library should be reaching out to latchkey programs, day care centers, the YMCA, the Boys and Girls Clubs, summer camp programs, summer recreation programs, and local homeschoolers. This approach also suggests setting specific targets for each market segment rather than relying on total registration numbers.

- Explore the possibility of developing partnerships with local schools to involve teachers in assessing the library’s SRP. Linking the results of standardized reading tests with participation in the public library’s SRP can potentially lead to more funding.

- Develop an online version of the SRP that would appeal to yet another segment of the community currently not being served by the library. Online SRPs have been successful in a few public libraries.

The benefits of a public library’s SRP to children and to the community are significant and positive, especially for at-risk children who are likely to experience “summer reading loss.” The public library must become much more aggressive about reaching more children than the 10 percent of the community who are traditionally frequent library users. The challenge for the library is to evaluate the impacts of the SRP using a broader array of methods and to communicate the results to the community’s stakeholders.

References and Notes

1. J. L. Locke, “The Effectiveness of Summer Reading Programs in Public Libraries in the United States” (PhD diss., University of Pittsburgh, 1988).

2. Donna Celano and Susan B. Neuman, The Role of Public Libraries in Children’s Literacy Development: An Evaluation Report (Harrisburg, Pa.: Pennsylvania Library Association, Evaluation and Training Institute, 2001); Evaluation of the Public Library Summer Reading Program: Books and Beyond . . . Take Me to Your Reader! (Los Angeles: Evaluation and Training Institute, 2001).

4. Richard M. Orr, “Measuring the Goodness of Library Services,” Journal of Documentation 29, no. 3 (1973): 315–52.

5. G. Kylene Beers, “No Time, No Interest, No Way!” School Library Journal 42, no. 2 (Feb. 1996): 110–14.

6. The Web-based survey was graciously hosted by Counting Opinions, a firm that provides comprehensive, cost-effective, real-time solutions designed for libraries, in support of customer insight, operational improvements, and advocacy efforts.

7. Walter Minkel, “Making a Splash with Summer Reading: Seven Ways Public Libraries Can Team Up with Schools,” School Library Journal 49, no. 1 (Jan. 2003): 54–56.